Ordinary Linear Regression

General Form

$$f(\boldsymbol{x}) = w_1x_1 + \dots + w_kx_k + b$$

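The parameters to optimize are the weights $w_1, \dots, w_k$ and the bias $b$. They are usually estimated with the least squares method.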

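It is usually written in vector form:

$$f(\boldsymbol{x}) = \boldsymbol{w}^T\boldsymbol{x} + b$$

where $\boldsymbol{w} = (w_1, \dots, w_k)^T$ and $\boldsymbol{x} = (x_1, \dots, x_k)^T$ are both column vectors.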

Optimization: Least Squares Method

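The goal is to find parameters $\boldsymbol{w}, b$ that minimize the mean squared error (MSE) between the predictions and the true outputs over all inputs.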

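Define the error $E$ as the sum of squared errors (dividing by $n$ gives the MSE, which has the same minimizer):

$$E = \sum_{i=1}^n \left(f(\boldsymbol{x}_i) - y_i\right)^2$$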

The optimization objective is

$$\boldsymbol{w}^*, b^* = \arg\min_{\boldsymbol{w}, b} E$$
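
As a small sketch of the objective above, assuming samples are given as plain Python lists (the function name `squared_error` is hypothetical), $E$ can be computed directly from $f(\boldsymbol{x}) = \boldsymbol{w}^T\boldsymbol{x} + b$:

```python
def squared_error(w, b, xs, ys):
    """E = sum_i (f(x_i) - y_i)^2 with f(x) = w^T x + b.

    xs is a list of feature vectors (lists of floats), ys the matching targets.
    """
    total = 0.0
    for x, y in zip(xs, ys):
        pred = sum(wj * xj for wj, xj in zip(w, x)) + b  # f(x) = w^T x + b
        total += (pred - y) ** 2
    return total


xs = [[1.0, 2.0], [3.0, 4.0]]
ys = [5.0, 10.0]
E = squared_error([1.0, 1.0], 1.0, xs, ys)
print(E)            # (4 - 5)^2 + (8 - 10)^2 = 5.0
print(E / len(xs))  # the MSE, 2.5; it has the same minimizer as E
```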

Least Squares Derivation for Simple (One-Variable) Linear Regression

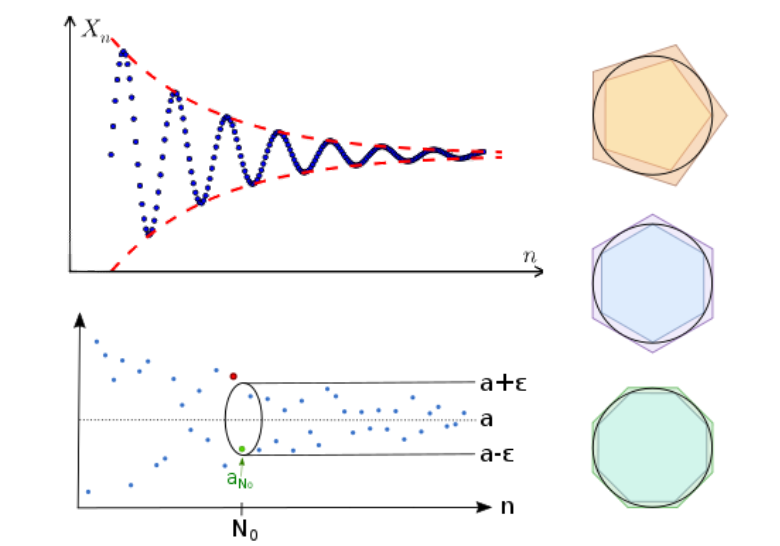

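With a single feature, $f(x) = wx + b$ and $E = \sum_{i=1}^n (wx_i + b - y_i)^2$. Each term is 0 where the prediction $wx_i + b$ meets $y_i$ and grows on either side of that point; a sum of squares of functions that are affine in $(w, b)$ is convex, so $E$ is a convex function of $(w, b)$.

Because $E$ is convex, it attains its minimum where its derivatives are 0. To find the $w$ and $b$ that minimize $E$, take the partial derivatives of $E$ with respect to $w$ and $b$ and set them to 0, which yields a closed-form solution: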

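$$
\begin{aligned}
\frac{\partial E}{\partial w} &= \frac{\partial}{\partial w}\left[\sum_{i=1}^n (wx_i + b - y_i)^2\right] \\
&= \sum_{i=1}^n \frac{\partial}{\partial w}\left[(wx_i + b - y_i)^2\right] \\
&= \sum_{i=1}^n \left[2(wx_i + b - y_i)\,x_i\right] \\
&= 2\left(w\sum_{i=1}^n x_i^2 - \sum_{i=1}^n x_iy_i + b\sum_{i=1}^n x_i\right) \\
&= 2\left(w\sum_{i=1}^n x_i^2 - \sum_{i=1}^n (y_i - b)x_i\right)
\end{aligned}
\tag{1}
$$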

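$$
\begin{aligned}
\frac{\partial E}{\partial b} &= \sum_{i=1}^n \frac{\partial}{\partial b}\left[(wx_i + b - y_i)^2\right] \\
&= \sum_{i=1}^n \left[2(wx_i + b - y_i)\right] \\
&= 2\left(nb - \sum_{i=1}^n (y_i - wx_i)\right)
\end{aligned}
\tag{2}
$$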
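
A quick way to sanity-check (1) and (2) is to compare them with central finite differences of $E$. The sketch below does that on a toy dataset; the helper names and the data are made up for illustration. Because $E$ is quadratic in $(w, b)$, the central difference matches the exact derivative up to rounding error.

```python
def sse(w, b, xs, ys):
    """E = sum_i (w x_i + b - y_i)^2 for a single feature."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys))


def grad_closed_form(w, b, xs, ys):
    """Partial derivatives from equations (1) and (2)."""
    n = len(xs)
    dw = 2 * (w * sum(x * x for x in xs) - sum((y - b) * x for x, y in zip(xs, ys)))  # (1)
    db = 2 * (n * b - sum(y - w * x for x, y in zip(xs, ys)))  # (2)
    return dw, db


def grad_numeric(w, b, xs, ys, h=1e-6):
    """Central finite differences, used only as a sanity check."""
    dw = (sse(w + h, b, xs, ys) - sse(w - h, b, xs, ys)) / (2 * h)
    db = (sse(w, b + h, xs, ys) - sse(w, b - h, xs, ys)) / (2 * h)
    return dw, db


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]
print(grad_closed_form(0.5, 0.3, xs, ys))
print(grad_numeric(0.5, 0.3, xs, ys))  # should agree to several decimals
```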

Setting (1) to 0:

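$$
\begin{aligned}
0 &= 2\left(w\sum_{i=1}^n x_i^2 - \sum_{i=1}^n (y_i - b)x_i\right) \\
0 &= w\sum_{i=1}^n x_i^2 - \sum_{i=1}^n (y_i - b)x_i \\
w\sum_{i=1}^n x_i^2 &= \sum_{i=1}^n (y_i - b)x_i
\end{aligned}
\tag{3}
$$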

Setting (2) to 0:

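$$
\begin{aligned}
0 &= 2\left(nb - \sum_{i=1}^n (y_i - wx_i)\right) \\
b &= \frac{1}{n}\sum_{i=1}^n y_i - w\left(\frac{1}{n}\sum_{i=1}^n x_i\right) \\
b &= \bar{y} - w\bar{x}
\end{aligned}
\tag{4}
$$

where $\bar{x}$ and $\bar{y}$ are the means of the $x_i$ and the $y_i$.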

Substituting (4) into (3):

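$$
\begin{aligned}
w\sum_{i=1}^n x_i^2 &= \sum_{i=1}^n \left(y_i - (\bar{y} - w\bar{x})\right)x_i \\
w\sum_{i=1}^n x_i^2 &= \sum_{i=1}^n \left(x_iy_i - \bar{y}x_i + w\bar{x}x_i\right) \\
w\sum_{i=1}^n x_i^2 &= \sum_{i=1}^n x_iy_i - \bar{y}\sum_{i=1}^n x_i + w\bar{x}\sum_{i=1}^n x_i \\
w\left(\sum_{i=1}^n x_i^2 - \bar{x}\sum_{i=1}^n x_i\right) &= \sum_{i=1}^n x_iy_i - \bar{y}\sum_{i=1}^n x_i \\
w &= \frac{\sum_{i=1}^n x_iy_i - \bar{y}\sum_{i=1}^n x_i}{\sum_{i=1}^n x_i^2 - \bar{x}\sum_{i=1}^n x_i} \\
w &= \frac{\sum_{i=1}^n x_iy_i - \frac{1}{n}\sum_{i=1}^n y_i\sum_{i=1}^n x_i}{\sum_{i=1}^n x_i^2 - \frac{1}{n}\left(\sum_{i=1}^n x_i\right)^2} \\
w &= \frac{\sum_{i=1}^n x_iy_i - \sum_{i=1}^n y_i\bar{x}}{\sum_{i=1}^n x_i^2 - \frac{1}{n}\left(\sum_{i=1}^n x_i\right)^2} \\
w &= \frac{\sum_{i=1}^n y_i(x_i - \bar{x})}{\sum_{i=1}^n x_i^2 - \frac{1}{n}\left(\sum_{i=1}^n x_i\right)^2}
\end{aligned}
\tag{5}
$$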
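
A minimal sketch of the closed-form solution, computing $w$ from (5) and then $b$ from (4). The name `fit_simple` is hypothetical, and the data are noise-free points on $y = 2x + 1$, so the fit should recover $w = 2$, $b = 1$:

```python
def fit_simple(xs, ys):
    """Closed-form least squares for f(x) = w x + b: w from (5), then b from (4)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    num = sum(y * (x - x_bar) for x, y in zip(xs, ys))  # numerator of (5)
    den = sum(x * x for x in xs) - sum(xs) ** 2 / n  # denominator of (5)
    w = num / den
    b = y_bar - w * x_bar  # (4)
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
print(fit_simple(xs, ys))  # → (2.0, 1.0)
```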

To take advantage of libraries that accelerate matrix operations, rewrite (5) in vector form.

Detailed steps:

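$$
\begin{aligned}
w &= \frac{\sum_{i=1}^n x_iy_i - \bar{y}\sum_{i=1}^n x_i}{\sum_{i=1}^n x_i^2 - \bar{x}\sum_{i=1}^n x_i} \\
&= \frac{\sum_{i=1}^n x_iy_i - \bar{y}\left(n\cdot\frac{1}{n}\sum_{i=1}^n x_i\right)}{\sum_{i=1}^n x_i^2 - \bar{x}\left(n\cdot\frac{1}{n}\sum_{i=1}^n x_i\right)} \\
&= \frac{\sum_{i=1}^n x_iy_i - \bar{y}(n\bar{x})}{\sum_{i=1}^n x_i^2 - \bar{x}(n\bar{x})} \\
&= \frac{\sum_{i=1}^n x_iy_i - n\bar{x}\bar{y}}{\sum_{i=1}^n x_i^2 - n\bar{x}^2} \\
&= \frac{\sum_{i=1}^n x_iy_i - \sum_{i=1}^n \bar{x}\bar{y}}{\sum_{i=1}^n x_i^2 - \sum_{i=1}^n \bar{x}^2} \\
&= \frac{\sum_{i=1}^n \left(x_iy_i - (x_i\bar{y} + \bar{x}y_i - \bar{x}\bar{y})\right)}{\sum_{i=1}^n \left(x_i^2 - (x_i\bar{x} + \bar{x}x_i - \bar{x}^2)\right)} \\
&= \frac{\sum_{i=1}^n \left(x_iy_i - x_i\bar{y} - \bar{x}y_i + \bar{x}\bar{y}\right)}{\sum_{i=1}^n \left(x_i^2 - x_i\bar{x} - \bar{x}x_i + \bar{x}^2\right)} \\
&= \frac{\sum_{i=1}^n \left(x_i(y_i - \bar{y}) - \bar{x}(y_i - \bar{y})\right)}{\sum_{i=1}^n \left(x_i(x_i - \bar{x}) - \bar{x}(x_i - \bar{x})\right)} \\
&= \frac{\sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^n (x_i - \bar{x})^2}
\end{aligned}
$$

The step from the fifth to the sixth line uses $\sum_{i=1}^n x_i\bar{y} = \sum_{i=1}^n \bar{x}y_i = \sum_{i=1}^n \bar{x}\bar{y} = n\bar{x}\bar{y}$, so $\sum_{i=1}^n (x_i\bar{y} + \bar{x}y_i - \bar{x}\bar{y}) = n\bar{x}\bar{y}$; the denominator is handled the same way with $\sum_{i=1}^n x_i\bar{x} = \sum_{i=1}^n \bar{x}^2 = n\bar{x}^2$.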

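Let $\boldsymbol{x}_d = (x_1 - \bar{x}; \dots; x_n - \bar{x})$ be the mean-centered $x$ and $\boldsymbol{y}_d = (y_1 - \bar{y}; \dots; y_n - \bar{y})$ the mean-centered $y$. Substituting into the expression above gives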

$$w = \frac{\boldsymbol{x}_d^T\boldsymbol{y}_d}{\boldsymbol{x}_d^T\boldsymbol{x}_d}$$
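
The centered form can be sketched the same way. Plain Python lists stand in for a matrix library here; with NumPy arrays the two inner products would simply be `xd @ yd` and `xd @ xd`. The function names `dot` and `fit_centered` are hypothetical, and $b$ is still recovered from (4).

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def fit_centered(xs, ys):
    """w = x_d^T y_d / x_d^T x_d, then b = y_bar - w * x_bar as in (4)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    xd = [x - x_bar for x in xs]  # mean-centered x
    yd = [y - y_bar for y in ys]  # mean-centered y
    w = dot(xd, yd) / dot(xd, xd)
    b = y_bar - w * x_bar
    return w, b


print(fit_centered([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 5.0, 8.0]))  # w close to 1.9, b close to 0
```

On this data both (5) and the centered form give $w = 9.5 / 5 = 1.9$.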

Derivation for Multiple Linear Regression

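For convenience, consider $n$ samples with $d$ features each and let

$$
\mathbf{X} = \begin{pmatrix}
x_{11} & \cdots & x_{1d} & 1 \\
\vdots & \ddots & \vdots & \vdots \\
x_{n1} & \cdots & x_{nd} & 1
\end{pmatrix}
= \begin{pmatrix}
\boldsymbol{x}_1^T & 1 \\
\vdots & \vdots \\
\boldsymbol{x}_n^T & 1
\end{pmatrix}
= \begin{pmatrix}
\hat{\boldsymbol{x}}_1^T \\
\vdots \\
\hat{\boldsymbol{x}}_n^T
\end{pmatrix}
$$

$$\hat{\boldsymbol{w}} = (\boldsymbol{w}; b)$$

where $\hat{\boldsymbol{x}}_i = (\boldsymbol{x}_i; 1)$, so that $\hat{\boldsymbol{x}}_i^T\hat{\boldsymbol{w}} = \boldsymbol{w}^T\boldsymbol{x}_i + b$.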

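$$
E = \sum_{i=1}^n \left(y_i - \hat{\boldsymbol{x}}_i^T\hat{\boldsymbol{w}}\right)^2
= \begin{bmatrix} y_1 - \hat{\boldsymbol{x}}_1^T\hat{\boldsymbol{w}} & \cdots & y_n - \hat{\boldsymbol{x}}_n^T\hat{\boldsymbol{w}} \end{bmatrix}
\begin{bmatrix} y_1 - \hat{\boldsymbol{x}}_1^T\hat{\boldsymbol{w}} \\ \vdots \\ y_n - \hat{\boldsymbol{x}}_n^T\hat{\boldsymbol{w}} \end{bmatrix}
= (\boldsymbol{y} - \mathbf{X}\hat{\boldsymbol{w}})^T(\boldsymbol{y} - \mathbf{X}\hat{\boldsymbol{w}})
$$

where $\boldsymbol{y} = (y_1; \dots; y_n)$.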

Expanding $E$:

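$$E = \boldsymbol{y}^T\boldsymbol{y} - \boldsymbol{y}^T\mathbf{X}\hat{\boldsymbol{w}} - \hat{\boldsymbol{w}}^T\mathbf{X}^T\boldsymbol{y} + \hat{\boldsymbol{w}}^T\mathbf{X}^T\mathbf{X}\hat{\boldsymbol{w}}$$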

Differentiating with respect to $\hat{\boldsymbol{w}}$:

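$$\frac{\partial E}{\partial \hat{\boldsymbol{w}}} = \frac{\partial \boldsymbol{y}^T\boldsymbol{y}}{\partial \hat{\boldsymbol{w}}} - \frac{\partial \boldsymbol{y}^T\mathbf{X}\hat{\boldsymbol{w}}}{\partial \hat{\boldsymbol{w}}} - \frac{\partial \hat{\boldsymbol{w}}^T\mathbf{X}^T\boldsymbol{y}}{\partial \hat{\boldsymbol{w}}} + \frac{\partial \hat{\boldsymbol{w}}^T\mathbf{X}^T\mathbf{X}\hat{\boldsymbol{w}}}{\partial \hat{\boldsymbol{w}}}$$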

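Using the matrix calculus identities $\frac{\partial \boldsymbol{a}^T\boldsymbol{x}}{\partial \boldsymbol{x}} = \frac{\partial \boldsymbol{x}^T\boldsymbol{a}}{\partial \boldsymbol{x}} = \boldsymbol{a}$ and $\frac{\partial \boldsymbol{x}^T\mathbf{A}\boldsymbol{x}}{\partial \boldsymbol{x}} = (\mathbf{A} + \mathbf{A}^T)\boldsymbol{x}$ (denominator layout):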

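$$\frac{\partial E}{\partial \hat{\boldsymbol{w}}} = 0 - \mathbf{X}^T\boldsymbol{y} - \mathbf{X}^T\boldsymbol{y} + (\mathbf{X}^T\mathbf{X} + \mathbf{X}^T\mathbf{X})\hat{\boldsymbol{w}} = 2\mathbf{X}^T(\mathbf{X}\hat{\boldsymbol{w}} - \boldsymbol{y})$$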

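The proof that $E$ is convex is omitted (it follows from the Hessian $2\mathbf{X}^T\mathbf{X}$ being positive semidefinite). Setting the gradient above to 0: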

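$$
\begin{aligned}
0 &= 2\mathbf{X}^T(\mathbf{X}\hat{\boldsymbol{w}} - \boldsymbol{y}) \\
\mathbf{X}^T\mathbf{X}\hat{\boldsymbol{w}} &= \mathbf{X}^T\boldsymbol{y} \\
\hat{\boldsymbol{w}} &= (\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\boldsymbol{y}
\end{aligned}
$$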
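
As a sketch of the normal equations, assuming plain Python lists and a small hand-written solver (both hypothetical, not from any library), the code below builds $\mathbf{X}$ with a trailing column of ones, forms $\mathbf{X}^T\mathbf{X}$ and $\mathbf{X}^T\boldsymbol{y}$, and solves $\mathbf{X}^T\mathbf{X}\hat{\boldsymbol{w}} = \mathbf{X}^T\boldsymbol{y}$ by Gaussian elimination rather than forming the inverse explicitly, which is the usual numerical practice:

```python
def solve(A, c):
    """Solve A z = c by Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [ci] for row, ci in zip(A, c)]  # augmented matrix [A | c]
    for col in range(m):
        # Move the row with the largest pivot into place for numerical stability
        pivot = max(range(col, m), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (X^T X not invertible)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, m):
            factor = M[r][col] / M[col][col]
            for k in range(col, m + 1):
                M[r][k] -= factor * M[col][k]
    # Back substitution on the upper-triangular system
    z = [0.0] * m
    for r in range(m - 1, -1, -1):
        s = sum(M[r][k] * z[k] for k in range(r + 1, m))
        z[r] = (M[r][m] - s) / M[r][r]
    return z


def fit_multiple(xs, ys):
    """Return w_hat = (w_1, ..., w_d, b) solving X^T X w_hat = X^T y."""
    X = [list(x) + [1.0] for x in xs]  # rows x_hat_i^T = (x_i^T, 1)
    p = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    Xty = [sum(row[i] * y for row, y in zip(X, ys)) for i in range(p)]
    return solve(XtX, Xty)


# Noise-free targets from y = 3*x1 - 2*x2 + 0.5
xs = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
ys = [3 * x1 - 2 * x2 + 0.5 for x1, x2 in xs]
print(fit_multiple(xs, ys))  # approximately [3.0, -2.0, 0.5]
```

In practice a library least-squares routine such as NumPy's `numpy.linalg.lstsq` would replace the hand-written solver.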

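If $\mathbf{X}^T\mathbf{X}$ is not positive definite (it is always positive semidefinite, so this means it is singular, e.g. when there are more features than samples or some features are linearly dependent), the equation $\mathbf{X}^T\mathbf{X}\hat{\boldsymbol{w}} = \mathbf{X}^T\boldsymbol{y}$ has infinitely many solutions, all of which minimize $E$. A unique solution can then be selected by adding a regularization term or by using the pseudo-inverse, which returns the minimum-norm solution.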
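
The singular case can be seen on a tiny example. In the sketch below (data and function names are illustrative), the second feature is exactly twice the first and there is no bias column, so $\mathbf{X}^T\mathbf{X}$ is a singular $2\times 2$ matrix and every $\boldsymbol{w}$ with $w_1 + 2w_2 = 1$ fits perfectly. Adding a ridge penalty $\lambda\boldsymbol{w}^T\boldsymbol{w}$ (one choice of regularizer, assumed here) turns the system into $(\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})\boldsymbol{w} = \mathbf{X}^T\boldsymbol{y}$, whose unique solution on this data is $w_1 = 14/(70+\lambda)$, $w_2 = 28/(70+\lambda)$. As $\lambda \to 0$ it approaches $(0.2, 0.4)$, the minimum-norm solution that the pseudo-inverse returns.

```python
def normal_matrices(xs, ys):
    """Return X^T X and X^T y for a design matrix with rows xs (no bias column)."""
    d = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(d)] for i in range(d)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(d)]
    return xtx, xty


def ridge_2d(xs, ys, lam):
    """Solve (X^T X + lam * I) w = X^T y for two features via the 2x2 inverse."""
    A, rhs = normal_matrices(xs, ys)
    a, b = A[0][0] + lam, A[0][1]
    c, d = A[1][0], A[1][1] + lam
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular; use lam > 0")
    w1 = (d * rhs[0] - b * rhs[1]) / det
    w2 = (-c * rhs[0] + a * rhs[1]) / det
    return w1, w2


xs = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]  # second feature = 2 * first
ys = [1.0, 2.0, 3.0]
A, _ = normal_matrices(xs, ys)
print(A[0][0] * A[1][1] - A[0][1] * A[1][0])  # 0.0: X^T X is singular
print(ridge_2d(xs, ys, 1.0))   # (14/71, 28/71)
print(ridge_2d(xs, ys, 1e-6))  # close to (0.2, 0.4)
```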

Generalized Linear Models

Instead of $y$ itself, let the linear model approximate a transformed version $g(y)$ of $y$:

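$$
\begin{aligned}
g(y) &= \boldsymbol{w}^T\boldsymbol{x} + b \\
y &= g^{-1}(\boldsymbol{w}^T\boldsymbol{x} + b)
\end{aligned}
$$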

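The link function $g(\cdot)$ should contain no parameters to be optimized; it is also required to be monotonic and differentiable, so that $g^{-1}$ exists.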
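
As one concrete instance, take $g(y) = \ln y$ (log-linear regression, chosen here only as an example). Because $g$ has no trainable parameters, one simple approach, assumed in this sketch, is to transform the targets to $\ln y$, fit them with the one-variable least-squares formulas, and map predictions back with $g^{-1} = \exp$. This minimizes squared error in log space, not in the original $y$ space. The function names are hypothetical.

```python
import math


def fit_log_linear(xs, ys):
    """Fit ln(y) = w x + b (link g = ln) by least squares on the transformed targets."""
    zs = [math.log(y) for y in ys]  # g(y) = ln y; requires every y > 0
    n = len(xs)
    x_bar = sum(xs) / n
    z_bar = sum(zs) / n
    w = (sum((x - x_bar) * (z - z_bar) for x, z in zip(xs, zs))
         / sum((x - x_bar) ** 2 for x in xs))
    b = z_bar - w * x_bar
    return w, b


def predict(w, b, x):
    """y = g^{-1}(w x + b) = exp(w x + b)."""
    return math.exp(w * x + b)


xs = [0.0, 1.0, 2.0, 3.0]
ys = [math.exp(0.5 * x + 1.0) for x in xs]  # synthetic data with w = 0.5, b = 1.0
w, b = fit_log_linear(xs, ys)
print(w, b)  # approximately 0.5 and 1.0
```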

Log-Odds Regression (Logit Regression)

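Typically used for binary classification. Let $y$ be the predicted probability that sample $\boldsymbol{x}$ is a positive example, so $1-y$ is the probability that it is negative. Their ratio $\frac{y}{1-y}$, the odds, reflects the relative likelihood of $\boldsymbol{x}$ being positive; taking its logarithm gives the log-odds $\ln\frac{y}{1-y}$. Log-odds regression fits the log-odds with a linear model: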

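$$
\begin{aligned}
\ln\frac{y}{1-y} &= \boldsymbol{w}^T\boldsymbol{x} + b \\
y &= \frac{1}{1 + e^{-(\boldsymbol{w}^T\boldsymbol{x} + b)}}
\end{aligned}
$$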
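
The two directions of the log-odds relation can be sketched as a pair of functions; the names `sigmoid` and `log_odds` are labels chosen here. The sigmoid branches on the sign of $z$ so that `math.exp` is only ever called on a non-positive argument, which avoids `OverflowError` for large $|z|$:

```python
import math


def sigmoid(z):
    """y = 1 / (1 + e^{-z}), the inverse of the log-odds."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0 here, so exp cannot overflow
    return ez / (1.0 + ez)


def log_odds(y):
    """ln(y / (1 - y)) for 0 < y < 1."""
    return math.log(y / (1.0 - y))


for z in [-3.0, 0.0, 2.5]:
    print(z, sigmoid(z), log_odds(sigmoid(z)))  # last column recovers z
```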

For binary classification, the error is usually measured not with MSE but with BCELoss (binary cross-entropy):

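$$\ell(y, \hat{y}) = -\left[y\ln\hat{y} + (1-y)\ln(1-\hat{y})\right]$$

where $y \in \{0, 1\}$ is the true label and $\hat{y}$ is the predicted probability of the positive class.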
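
A direct per-sample sketch of this loss. The name `bce` and the clipping constant `eps` are assumptions; clipping keeps $\ln$ finite when $\hat{y}$ is exactly 0 or 1.

```python
import math


def bce(y, y_hat, eps=1e-12):
    """Per-sample binary cross-entropy -(y ln y_hat + (1 - y) ln(1 - y_hat)), y in {0, 1}."""
    y_hat = min(max(y_hat, eps), 1.0 - eps)  # clip so neither log sees 0
    return -(y * math.log(y_hat) + (1 - y) * math.log(1.0 - y_hat))


print(bce(1, 0.9))  # about 0.105: confident and correct
print(bce(1, 0.1))  # about 2.303: confident and wrong
```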

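The total error over the $n$ samples, with $\hat{y}_i = \frac{1}{1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}$ and $\hat{\boldsymbol{w}}$, $\hat{\boldsymbol{x}}_i$ defined as in the multiple linear regression section (so $\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i = \boldsymbol{w}^T\boldsymbol{x}_i + b$), is: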

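$$
\begin{aligned}
E &= -\sum_{i=1}^n\left(y_i\ln\frac{1}{1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}} + (1-y_i)\ln\frac{e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}{1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}\right) \\
&= -\sum_{i=1}^n\left(y_i\ln\frac{1}{1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}} + \ln\frac{e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}{1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}} - y_i\ln\frac{e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}{1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}\right) \\
&= -\sum_{i=1}^n\left(-y_i\ln\left(1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right) - \ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right) + y_i\ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\right) \\
&= -\sum_{i=1}^n\left(y_i\left[\ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right) - \ln\left(1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\right] - \ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\right) \\
&= -\sum_{i=1}^n\left(y_i\ln\frac{1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}{1+e^{-\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}} - \ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\right) \\
&= -\sum_{i=1}^n\left(y_i\ln\frac{1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}{1+\frac{1}{e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}} - \ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\right) \\
&= -\sum_{i=1}^n\left(y_i\ln\frac{\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\cdot e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}{e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i} + \frac{1}{e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}\cdot e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}} - \ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\right) \\
&= -\sum_{i=1}^n\left(y_i\ln\frac{\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\cdot e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}}{1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}} - \ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right)\right) \\
&= \sum_{i=1}^n\left(\ln\left(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}\right) - y_i\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i\right)
\end{aligned}
$$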
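
The algebra above can be checked numerically: the original cross-entropy form and the simplified form $\sum_i\left(\ln(1+e^{\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i}) - y_i\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i\right)$ should agree for any parameters. The weights and samples below are arbitrary made-up values, each $\hat{\boldsymbol{x}}_i$ carries a trailing 1 for the bias, and the function names are hypothetical.

```python
import math


def loss_direct(w_hat, xs_hat, ys):
    """E = -sum(y ln p + (1 - y) ln(1 - p)) with p = 1 / (1 + e^{-w_hat^T x_hat_i})."""
    total = 0.0
    for x, y in zip(xs_hat, ys):
        z = sum(a * b for a, b in zip(w_hat, x))
        p = 1.0 / (1.0 + math.exp(-z))
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total


def loss_simplified(w_hat, xs_hat, ys):
    """E = sum(ln(1 + e^z) - y z), the last line of the derivation (fine for moderate z)."""
    total = 0.0
    for x, y in zip(xs_hat, ys):
        z = sum(a * b for a, b in zip(w_hat, x))
        total += math.log1p(math.exp(z)) - y * z
    return total


w_hat = [0.8, -0.4, 0.1]  # (w_1, w_2, b)
xs_hat = [[1.0, 2.0, 1.0], [-0.5, 0.3, 1.0], [2.0, -1.0, 1.0]]  # rows (x_i; 1)
ys = [1, 0, 1]
print(loss_direct(w_hat, xs_hat, ys), loss_simplified(w_hat, xs_hat, ys))  # equal up to rounding
```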

The objective is:

$$\hat{\boldsymbol{w}}^* = \arg\min_{\hat{\boldsymbol{w}}} E$$

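The proof that $E$ is convex is omitted. Unlike linear regression, setting the gradient to 0 gives no closed-form solution here, so the optimum is found numerically, for example with gradient descent or Newton's method.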

Gradient Descent Derivation for Log-Odds Regression

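With $\hat{\boldsymbol{w}}^T\hat{\boldsymbol{x}}_i = \boldsymbol{w}^T\boldsymbol{x}_i + b$, the prediction for sample $i$ and the total error are

$$\hat{y}_i = \frac{1}{1 + e^{-(\boldsymbol{w}^T\boldsymbol{x}_i + b)}}$$

$$E = \sum_{i=1}^n\left(\ln\left(1+e^{\boldsymbol{w}^T\boldsymbol{x}_i + b}\right) - y_i(\boldsymbol{w}^T\boldsymbol{x}_i + b)\right)$$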

Partial derivative of $E$ with respect to $w_k$, where $x_{ik}$ is the $k$-th feature of sample $i$:

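$$
\begin{aligned}
\frac{\partial E}{\partial w_k} &= \sum_{i=1}^n\left(\frac{\partial}{\partial w_k}\ln\left(1+e^{\boldsymbol{w}^T\boldsymbol{x}_i + b}\right) - \frac{\partial}{\partial w_k}y_i(\boldsymbol{w}^T\boldsymbol{x}_i + b)\right) \\
&= \sum_{i=1}^n\left(\frac{1}{1+e^{\boldsymbol{w}^T\boldsymbol{x}_i + b}}\cdot e^{\boldsymbol{w}^T\boldsymbol{x}_i + b}\cdot x_{ik} - y_i x_{ik}\right) \\
&= \sum_{i=1}^n\left(\frac{1}{1+\frac{1}{e^{-(\boldsymbol{w}^T\boldsymbol{x}_i + b)}}}\cdot\frac{1}{e^{-(\boldsymbol{w}^T\boldsymbol{x}_i + b)}}\cdot x_{ik} - y_i x_{ik}\right) \\
&= \sum_{i=1}^n\left(\frac{1}{e^{-(\boldsymbol{w}^T\boldsymbol{x}_i + b)}+1}\cdot x_{ik} - y_i x_{ik}\right) \\
&= \sum_{i=1}^n(\hat{y}_i - y_i)\,x_{ik}
\end{aligned}
$$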

Partial derivative of $E$ with respect to $b$:

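$$
\begin{aligned}
\frac{\partial E}{\partial b} &= \sum_{i=1}^n\left(\frac{1}{e^{-(\boldsymbol{w}^T\boldsymbol{x}_i + b)}+1} - y_i\right) \\
&= \sum_{i=1}^n(\hat{y}_i - y_i)
\end{aligned}
$$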
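
The two partial derivatives can be verified with finite differences, in the same spirit as a gradient check. The sketch below uses arbitrary toy data and hypothetical helper names:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear(w, b, x):
    """w^T x + b."""
    return sum(wk * xk for wk, xk in zip(w, x)) + b


def loss(w, b, xs, ys):
    """E = sum_i (ln(1 + e^{w^T x_i + b}) - y_i (w^T x_i + b))."""
    return sum(math.log1p(math.exp(linear(w, b, x))) - y * linear(w, b, x)
               for x, y in zip(xs, ys))


def analytic_grad(w, b, xs, ys):
    """dE/dw_k = sum_i (y_hat_i - y_i) x_ik and dE/db = sum_i (y_hat_i - y_i)."""
    residuals = [sigmoid(linear(w, b, x)) - y for x, y in zip(xs, ys)]  # y_hat_i - y_i
    gw = [sum(r * x[k] for r, x in zip(residuals, xs)) for k in range(len(w))]
    gb = sum(residuals)
    return gw, gb


def numeric_grad(w, b, xs, ys, h=1e-6):
    """Central finite differences of loss() in each coordinate."""
    gw = []
    for k in range(len(w)):
        w_plus, w_minus = list(w), list(w)
        w_plus[k] += h
        w_minus[k] -= h
        gw.append((loss(w_plus, b, xs, ys) - loss(w_minus, b, xs, ys)) / (2 * h))
    gb = (loss(w, b + h, xs, ys) - loss(w, b - h, xs, ys)) / (2 * h)
    return gw, gb


xs = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8], [2.0, 1.0]]
ys = [0, 1, 0, 1]
w, b = [0.3, -0.7], 0.1
print(analytic_grad(w, b, xs, ys))
print(numeric_grad(w, b, xs, ys))  # should match to about 6 decimals
```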

Gradient descent updates, with learning rate $\eta$:

$$w_k := w_k - \eta\sum_{i=1}^n(\hat{y}_i - y_i)\,x_{ik}, \quad k = 1, \dots, d$$

$$b := b - \eta\sum_{i=1}^n(\hat{y}_i - y_i)$$
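
Putting the update rules together gives a batch gradient descent sketch. The learning rate `eta=0.05`, the 2000 iterations, the zero initialization, and the toy one-feature dataset are all illustrative assumptions. Both gradients are computed from the current parameters before either is updated, matching the simultaneous updates above.

```python
import math


def sigmoid(z):
    """Numerically stable 1 / (1 + e^{-z})."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, b, x):
    """y_hat = sigmoid(w^T x + b)."""
    return sigmoid(sum(wk * xk for wk, xk in zip(w, x)) + b)


def train_logistic(xs, ys, eta=0.05, epochs=2000):
    """Batch gradient descent: w_k := w_k - eta * grad_k, b := b - eta * grad_b."""
    d = len(xs[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        residuals = [predict_proba(w, b, x) - y for x, y in zip(xs, ys)]  # y_hat_i - y_i
        grad_w = [sum(r * x[k] for r, x in zip(residuals, xs)) for k in range(d)]
        grad_b = sum(residuals)
        # Both gradients came from the old (w, b), so this is a simultaneous update
        w = [wk - eta * gk for wk, gk in zip(w, grad_w)]
        b -= eta * grad_b
    return w, b


xs = [[0.5], [1.0], [1.5], [3.0], [3.5], [4.0]]
ys = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(xs, ys)
print([round(predict_proba(w, b, x)) for x in xs])  # → [0, 0, 0, 1, 1, 1]
```

On linearly separable data like this the loss keeps decreasing without reaching 0, so $\|\boldsymbol{w}\|$ grows slowly as iterations continue; a regularization term or early stopping is the usual remedy.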